The debate around the correlation between behavioral metrics and rankings has been going on for at least a few years. On the face of it, the topic should be uncontroversial: after all, it’s a yes-or-no question. So why are opinions on it so divided?

The problem is, what Google representatives have said on the issue has been surprisingly inconsistent. They confirmed it, and they denied it, and they confirmed it again, but most often, they equivocated.

Here’s a little thought experiment: for a moment, let us assume that user behavior is not a ranking signal. Can you think of a reason why Google employees would often publicly say the opposite? (You’ll find some pretty straightforward quotes later on in this article.)

Now, let’s assume for a second that Google does consider user metrics in its ranking algorithms. Why wouldn’t they be totally open about it? To answer that question, I’d like to take you back to the days when links largely determined where you ranked. Remember when SEO amounted to little more than exchanging and buying backlinks in ridiculous amounts? That lasted until Penguin and manual penalties arrived, of course.

With that experience in mind, Google probably doesn’t have much faith left in the good intentions of humankind, and rightly so. Revealing the ins and outs of its ranking algorithms could be an honorable thing to do, but it would also give many people an incentive to use this information in a not-so-white-hat way.

If you think about it, Google’s aim is a very noble one — to deliver a rewarding search experience to its users, ensuring that they can quickly find exactly what they’re looking for. And logically, the implicit feedback of Google searchers is a brilliant way to achieve that. In Google’s own words:

In this article, you’ll find the evidence that Google uses behavioral metrics to rank webpages, the 3 most important metrics that can make a real SEO difference, and real-life how-tos on applying that knowledge to improve your pages’ rankings.

The evidence

Using behavioral metrics as a ranking factor is logical, but it’s not just common sense that’s in favor of the assumption. There’s more tangible proof, and loads of it.

1. Google says so.

While some Google representatives may be reluctant to admit the impact of user metrics on rankings, many aren’t.

In a Federal Trade Commission court case, Google’s former Search Quality chief, Udi Manber, testified the following:

“The ranking itself is affected by the click data. If we discover that, for a particular query, hypothetically, 80 percent of people click on Result No. 2 and only 10 percent click on Result No. 1, after a while we figure probably Result 2 is the one people want. So we’ll switch it.”

Edmond Lau, who also used to work on Google Search Quality, said something similar:

“It’s pretty clear that any reasonable search engine would use click data on their own results to feed back into ranking to improve the quality of search results. Infrequently clicked results should drop toward the bottom because they’re less relevant, and frequently clicked results bubble toward the top.”

Want more? Back in 2011, Amit Singhal, one of Google’s top search engineers, mentioned in an interview with the Wall Street Journal that Google had added numerous “signals,” or factors, into its algorithm for ranking sites: “How users interact with a site is one of those signals.”

2. Google’s patents imply so.

While Google’s employees can equivocate about the correlation between user metrics and rankings in public talks, Google can’t omit information like this from its patents. It can word it in a roundabout way, but it can’t leave it out.

Google has a whole patent dedicated to modifying search result ranking based on implicit user feedback.

There are a bunch of other patents that mention user behavior as a ranking factor. In those, Google clearly states that along with the good old SEO factors, various user data can be used by a so-called rank modifier engine to determine a page’s position in search results.

3. Real-life experiments show so.

A couple of months ago, Rand Fishkin of Moz ran an unusual experiment. He asked his Twitter followers to run a Google search for “best grilled steak”, click on result No. 1 and quickly bounce back, and then click on result No. 4 and stay on that page for a while.

Guess what happened next? Result No. 4 got to the very top.

Coincidence? Hmm.

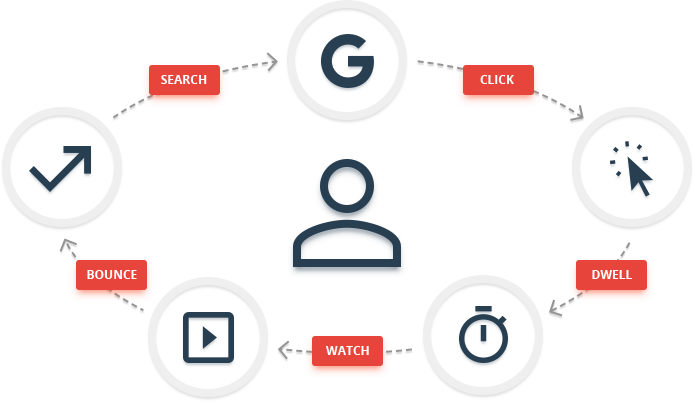

The 3 user metrics that make a difference

Okay, we’ve figured out that user behavior can affect your rankings — but what metrics are we talking about exactly?

1. Click-through rate


It is clear from numerous patents filed by Google that it collects and stores information on the click-through rates of search results. A click-through rate is the ratio of the number of times a given search listing was clicked on to the number of times it was displayed to searchers. Of course, there is no such thing as a universally good or bad CTR, and many of the factors that affect it are not directly within your control. Google is well aware of them.
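To make the definition concrete, here is a minimal Python sketch of the calculation; the function name `click_through_rate` is just an illustrative choice:

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """CTR as a fraction: times clicked divided by times displayed (impressions)."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    if not 0 <= clicks <= impressions:
        raise ValueError("clicks must be between 0 and impressions")
    return clicks / impressions


# A listing displayed 1,200 times and clicked 180 times:
print(f"{click_through_rate(180, 1200):.1%}")  # → 15.0%
```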

To start with, there’s presentation bias. Clearly, SERP CTR varies significantly for listings depending on where they rank in search results, with top results getting more clicks.

Secondly, different click-through rates are typical for different types of queries. For every query, Google expects the CTR of each listing to fall within a certain range (e.g. for branded keywords, the No. 1 result gets around 50% of clicks; for non-branded queries, the top result gets around 33%). As Rand Fishkin’s test above suggests, if a listing’s CTR is seriously above (or below) that range, Google can re-rank it in a surprisingly short time span.
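A rough way to picture this is to compare a listing’s CTR against an expected band for its query type. The sketch below is purely hypothetical: it centers the bands on the approximate No. 1 figures above and uses an arbitrary `tolerance` parameter; Google has not disclosed how it actually defines these ranges.

```python
# Approximate No. 1 CTRs quoted above; the tolerance below is a made-up parameter.
EXPECTED_TOP_CTR = {"branded": 0.50, "non_branded": 0.33}


def ctr_anomaly(observed_ctr: float, query_type: str, tolerance: float = 0.5) -> str:
    """Classify a No. 1 listing's CTR against a hypothetical expected band.

    The band runs from expected * (1 - tolerance) to expected * (1 + tolerance).
    """
    expected = EXPECTED_TOP_CTR[query_type]
    low, high = expected * (1 - tolerance), expected * (1 + tolerance)
    if observed_ctr < low:
        return "below expected range"
    if observed_ctr > high:
        return "above expected range"
    return "within expected range"


print(ctr_anomaly(0.12, "non_branded"))  # → below expected range
print(ctr_anomaly(0.55, "branded"))      # → within expected range
```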

One other thing to remember is that CTR affects rankings in real time. After Rand’s experiment, the listing participants had been clicking and dwelling on eventually dropped back to position No. 4 (right where it was before). This shows that a temporary increase in clicks can only result in a temporary ranking improvement.

2. Dwell time


Another thing that Google can use to modify rankings of search results, according to the patents, is dwell time. Simply put, dwell time is the amount of time that a visitor spends on a page after clicking on its listing on a SERP and before coming back to search results.
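Here is a minimal sketch of how dwell time could be derived from a search session, assuming a hypothetical log of `click` and `return` events with timestamps in seconds (the `SerpEvent` structure is illustrative, not a real Google data format):

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SerpEvent:
    timestamp: float  # seconds since the search session started
    kind: str         # "click" on a result, or "return" to the SERP
    url: str          # the result the event refers to


def dwell_times(events: list[SerpEvent]) -> dict[str, float]:
    """Pair each click with the next return to the SERP; the gap is the dwell time.

    A click with no later return (the searcher never came back) is skipped,
    since its dwell time can't be measured from SERP-side events alone.
    If a URL is visited twice, the later visit overwrites the earlier one.
    """
    result: dict[str, float] = {}
    open_click: Optional[SerpEvent] = None
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.kind == "click":
            open_click = event
        elif event.kind == "return" and open_click is not None:
            result[open_click.url] = event.timestamp - open_click.timestamp
            open_click = None
    return result


session = [
    SerpEvent(0, "click", "https://example.com/a"),
    SerpEvent(4, "return", "https://example.com/a"),
    SerpEvent(6, "click", "https://example.com/b"),
    SerpEvent(96, "return", "https://example.com/b"),
]
print(dwell_times(session))  # → {'https://example.com/a': 4, 'https://example.com/b': 90}
```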

Clearly, the longer the dwell time the better — both for Google and for yourself.

Google’s patent on modifying search results based on implicit user feedback says the following user information may be used to rank pages:

3. Pogo-sticking


Google wants searchers to be satisfied with the first search result they click on (ideally, the No.1 result). The best search experience is one that immediately lands the searcher on a page that has all the information they are looking for, so that they don’t hit the back button to return to the SERP and look for other alternatives.

Bouncing off pages quickly to return to the SERP and look for another result is called pogo-sticking.
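As a sketch, pogo-sticking can be spotted in a session as a quick return to the SERP followed by a click on a different result. The `Visit` structure and the 10-second `max_dwell` cut-off below are assumptions for illustration only; Google hasn’t published a threshold.

```python
from typing import NamedTuple


class Visit(NamedTuple):
    url: str
    clicked_at: float   # seconds into the search session
    returned_at: float  # when the searcher came back to the SERP


def pogo_sticks(visits: list[Visit], max_dwell: float = 10.0) -> list[str]:
    """Return URLs the searcher bounced off quickly before clicking another result.

    max_dwell is a hypothetical cut-off; Google has not published one.
    """
    ordered = sorted(visits, key=lambda v: v.clicked_at)
    bounced = []
    for current, following in zip(ordered, ordered[1:]):
        quick_return = current.returned_at - current.clicked_at < max_dwell
        if quick_return and following.url != current.url:
            bounced.append(current.url)
    return bounced


# The pattern from Rand Fishkin's test: bounce off No. 1, stay on No. 4.
print(pogo_sticks([Visit("result-1", 0, 3), Visit("result-4", 5, 200)]))  # → ['result-1']
```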

All of this affects your website’s overall quality score

OK, this one is really big: good performance in terms of clicks and viewing times isn’t just important for the individual page (and query) you are going after. It can impact the rankings of your site’s other pages, too.

Google has a patent on evaluating website properties by partitioning user feedback. Simply put, for every search somebody runs on Google, a Document-Query pair (D-Q) is created. Such pairs hold the information about the query itself, the search result that was clicked on, and user feedback in relation to that page. Clearly, there are trillions of such D-Qs; Google divides them into groups, or “partitions”. There are thousands of “partition parameters”, e.g. the length of the query, the popularity of the query, its commerciality, the number of search results found in Google in response to that query, etc. Eventually, a single D-Q can end up in thousands of different groups.
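To make the idea of partitions more tangible, here is a toy Python sketch. The three partition parameters and their cut-offs (two words, 10,000 monthly searches) are invented for illustration; the patent describes thousands of parameters, and this is not Google’s implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DocQueryPair:
    query: str
    document: str          # URL of the clicked result
    monthly_searches: int  # stand-in for query popularity
    is_commercial: bool    # stand-in for query commerciality
    satisfied: bool        # stand-in for the user feedback on the page


def partition_keys(pair: DocQueryPair) -> list[tuple[str, str]]:
    """Map one D-Q pair to every (parameter, value) group it falls into."""
    return [
        ("query_length", "short" if len(pair.query.split()) <= 2 else "long"),
        ("popularity", "head" if pair.monthly_searches >= 10_000 else "tail"),
        ("commercial", "yes" if pair.is_commercial else "no"),
    ]


def partition(pairs: list[DocQueryPair]) -> dict[tuple[str, str], list[DocQueryPair]]:
    """Group D-Q pairs; a single pair lands in one group per parameter."""
    groups = defaultdict(list)
    for pair in pairs:
        for key in partition_keys(pair):
            groups[key].append(pair)
    return dict(groups)


pair = DocQueryPair("best grilled steak", "https://example.com/steak", 20_000, False, True)
print(sorted(partition([pair])))
# → [('commercial', 'no'), ('popularity', 'head'), ('query_length', 'long')]
```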

“So what?”, you might reasonably ask. The reason Google does all of this mysterious partitioning is to determine a certain property of a website, such as its quality. This means that if some of your pages perform poorly in certain groups compared to other search results, Google could conclude that yours is not a high-quality site overall and down-rank many, or all, of its pages. The opposite is also true: if some of your pages perform well compared to competitors’, all of your site’s pages can be up-ranked in search results.
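Continuing in the same hypothetical vein, the sketch below scores a site by the share of partitions in which its pages satisfy searchers more often than the partition average. The scoring rule and the (domain, satisfied) record format are assumptions, not something the patent spells out:

```python
def site_quality_signal(
    partitions: dict[str, list[tuple[str, bool]]], site: str
) -> float:
    """Share of partitions in which `site` beats the partition-wide satisfaction rate.

    Each partition maps to (domain, satisfied) feedback records. Partitions in
    which the site has no records are skipped. Returns a value in [0, 1].
    """
    wins = compared = 0
    for records in partitions.values():
        site_records = [ok for domain, ok in records if domain == site]
        if not site_records:
            continue
        site_rate = sum(site_records) / len(site_records)
        overall_rate = sum(ok for _, ok in records) / len(records)
        compared += 1
        if site_rate > overall_rate:
            wins += 1
    return wins / compared if compared else 0.0


feedback = {
    "short-queries": [("a.com", True), ("b.com", False), ("b.com", False)],
    "long-queries": [("a.com", False), ("b.com", True)],
}
print(site_quality_signal(feedback, "a.com"))  # → 0.5
```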

How-to: Optimize user metrics for better rankings

The three metrics above are a must to track and improve on. Offering a great user experience to visitors is a win-win per se; as a sweet bonus, it can also improve your organic rankings.

1. Boost your SERP CTR

The snippet Google displays for search results consists of a title (taken from the page’s title tag), a URL, and a description (the page’s meta description tag).

- Find pages with poor CTR

To find the listings that need the most work, log in to your Google Webmaster Tools account and go to the Search Analytics report. Select Clicks, Impressions, CTR, and Position to be displayed:

While CTR values for different positions in Google SERPs can vary depending on the type of query, on average you can expect roughly 30% of clicks for a No. 1 result, 15% for a No. 2 result, and 10% for a No. 3 result. Here’s a graph from Chitika’s CTR study:

If the CTR for some of your listings is seriously below these averages, these could be the problem listings you’d want to focus on in the first place.
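If you export the report, a short script can do the comparison for you. This sketch assumes rows of (page, clicks, impressions, average position), uses the rough averages above as benchmarks, and flags pages below half the benchmark; the 0.5 threshold is an arbitrary choice you can tune:

```python
# Rough per-position averages quoted above; positions beyond 3 are not checked.
BENCHMARK_CTR = {1: 0.30, 2: 0.15, 3: 0.10}


def underperforming_pages(
    rows: list[tuple[str, int, int, float]], shortfall: float = 0.5
) -> list[tuple[str, float, float]]:
    """Return (page, ctr, benchmark) for pages below `shortfall` x their benchmark.

    rows: (page, clicks, impressions, avg_position) tuples, e.g. from a
    Search Analytics export. The average position is rounded to the nearest slot.
    """
    flagged = []
    for page, clicks, impressions, position in rows:
        benchmark = BENCHMARK_CTR.get(round(position))
        if benchmark is None or impressions == 0:
            continue
        ctr = clicks / impressions
        if ctr < benchmark * shortfall:
            flagged.append((page, round(ctr, 3), benchmark))
    return flagged


report = [
    ("/steak", 40, 1000, 1.2),  # 4% CTR at position 1: flagged
    ("/ribs", 120, 800, 2.1),   # 15% CTR at position 2: fine
    ("/sauce", 5, 500, 7.0),    # position 7: no benchmark, skipped
]
print(underperforming_pages(report))  # → [('/steak', 0.04, 0.3)]
```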

- Make sure your title and meta description meet the tech requirements

If you’re just getting started with optimizing your listings’ organic click-through rate, the first thing to do is make sure that all your titles and meta descriptions are in line with SEO best practices.

One thing to look out for is duplicate titles and descriptions. Although not directly related to user behavior, duplicate meta tags can confuse Google about which of your pages to rank for a given query (as a result, it could decide to rank none).

Remember to also check for any empty tags or titles and descriptions that are too long (in this case, they’ll be truncated by Google’s SERPs and you won’t get your message across).
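A quick audit script can catch all three problems at once. In this sketch, the 60- and 160-character limits are common rules of thumb rather than official Google limits (Google truncates snippets by pixel width), and the input format, a dict of URL to (title, description), is an assumption:

```python
from collections import defaultdict

# Common rules of thumb, not official limits: Google truncates by pixel width.
MAX_TITLE_CHARS = 60
MAX_DESCRIPTION_CHARS = 160


def audit_meta_tags(pages: dict[str, tuple[str, str]]) -> list[str]:
    """Report empty, overlong, and duplicate titles/descriptions.

    pages maps each URL to its (title, meta description) pair.
    """
    issues = []
    seen = {"title": defaultdict(list), "description": defaultdict(list)}
    for url, (title, description) in pages.items():
        for field, text, limit in (
            ("title", title, MAX_TITLE_CHARS),
            ("description", description, MAX_DESCRIPTION_CHARS),
        ):
            text = text.strip()
            if not text:
                issues.append(f"{url}: empty {field}")
                continue
            if len(text) > limit:
                issues.append(f"{url}: {field} is {len(text)} chars (> {limit})")
            seen[field][text.lower()].append(url)
    # Identical text (ignoring case) on more than one URL counts as a duplicate.
    for field, texts in seen.items():
        for urls in texts.values():
            if len(urls) > 1:
                issues.append(f"duplicate {field} on: {', '.join(urls)}")
    return issues


site = {
    "/a": ("Grilled steak guide", ""),
    "/b": ("Grilled steak guide", "x" * 200),
}
for issue in audit_meta_tags(site):
    print(issue)
```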

- Rewrite your titles and descriptions

There’s no universal formula for creating a click-inspiring search listing, but you should start with the nature of the query and its semantics. Are searchers researching a product or a service? Are they looking for information or solutions to problems? Would they prefer a brief answer or an exhaustive one? In a sense, you need to get into the head of the searching user and come up with a result that they’d want to click.

In the title, try to keep your keywords closer to the beginning — studies show that searchers skimming through SERPs only read the first 20 characters of a listing, so it’s a good idea to ensure that the search term is within those first 20 characters.
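Checking this rule across many titles is easy to automate. A minimal sketch, treating the 20-character window from the studies above as a parameter:

```python
def keyword_in_lead(title: str, keyword: str, lead_chars: int = 20) -> bool:
    """True if `keyword` (case-insensitive) fits entirely in the title's first `lead_chars` characters."""
    position = title.lower().find(keyword.lower())
    return position != -1 and position + len(keyword) <= lead_chars


print(keyword_in_lead("Grilled Steak: 7 Tips for a Perfect Sear", "grilled steak"))  # → True
print(keyword_in_lead("7 Tips for a Perfect Sear on Your Grilled Steak", "grilled steak"))  # → False
```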

Keep the URL clear and easy to read. For longer URLs, consider using breadcrumbs — Google’s alternative way of displaying a page’s location in the site hierarchy.

In your listing’s description, use a strong call to action. Instead of simply describing what your page is about, address the searcher and let them know about the benefits of navigating to your page, choosing your product, etc.

- Consider using rich snippets

To make your listing stand out from the crowd, one great thing to do is use structured data markup to enable rich snippets. Rich snippets can be used for different kinds of content, such as products, recipes, reviews, and events.

Google’s rich snippets can include images, ratings, and other industry-specific pieces of data.

You can always preview your snippets by copying and pasting your page’s source code into Google’s Structured Data Testing Tool.
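For illustration, here is a small Python helper that emits schema.org `Product` markup with a rating and an offer as JSON-LD, one of the structured data formats Google supports. The product details are made up, and you should check Google’s structured data guidelines for the properties each rich snippet type requires:

```python
import json


def product_json_ld(name: str, image_url: str, rating: float, review_count: int,
                    price: str, currency: str) -> str:
    """Build a schema.org Product snippet (JSON-LD) with rating and offer data."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "image": image_url,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    # Wrap the JSON in the script tag you would place in the page's HTML.
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


print(product_json_ld("Cast-Iron Grill Pan", "https://example.com/pan.jpg",
                      4.6, 128, "39.99", "USD"))
```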

2. Increase dwell time and avoid pogo-sticking

Once you’ve optimized your SERP CTR, you’ll need to make sure the people who click on your listing are satisfied with the page and stick around your site for a while.

- Optimize page speed and usability

First off, check your page against any possible usability issues. Factors like poor page speed and distracting pop-ups or ads will cause many visitors to bounce right back to search results.

Have a look at Page Speed (Desktop) and Page Usability (Mobile), and make sure no errors and warnings are found here.

- Look out for broken links

One logical way to increase dwell time is by encouraging further exploration of the site. Make sure your internal linking is intuitive and logical, and regularly make sure none of your links are dead.
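Dead links can be caught with a simple crawler. The sketch below collects `<a href>` targets from a page with Python’s standard library and reports those answering with a 4xx/5xx status or not at all. It sends HEAD requests, which some servers reject (a 405 here may not mean the page is gone); `status_of` is injectable so you can swap in a GET-based checker or test offline:

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class _LinkCollector(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def http_status(url: str) -> int:
    """Return the HTTP status of a HEAD request (0 if the host can't be reached)."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=10) as response:
            return response.status
    except HTTPError as error:  # 4xx/5xx responses arrive as HTTPError
        return error.code
    except OSError:  # DNS failures, refused connections, timeouts
        return 0


def broken_links(html: str, page_url: str,
                 status_of: Callable[[str], int] = http_status) -> list[str]:
    """Return absolute link targets that answer with 4xx/5xx or not at all."""
    collector = _LinkCollector()
    collector.feed(html)
    targets = {urljoin(page_url, href) for href in collector.hrefs
               if not href.startswith(("#", "mailto:", "tel:"))}
    return sorted(url for url in targets
                  if (code := status_of(url)) == 0 or code >= 400)
```

For example, `broken_links(page_html, "https://example.com/post")` checks every link on that page over the network.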

- Include links to related content

If you’re optimizing a page with a blog post or any other piece of content, adding a Related Articles section to the page is a good way to keep searchers on your site longer. You can do this manually by including the relevant links at the end of the post, or use this WordPress plugin to automatically add related posts in the footer of every piece of content you publish.

Similarly, for product pages on e-commerce sites, a Related Products section will entice users to go deeper into your site (and potentially order more from you). It’s also a great way to prevent bounces from the pages of out-of-stock products: instead of just bluntly telling visitors that you don’t have what they are looking for, you point them to alternatives.
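If you’d rather not rely on a plugin, a simple approach is to rank other items by how many tags they share with the current one. This sketch uses Jaccard similarity on hypothetical tag sets; it is one common technique, not necessarily what any particular plugin does:

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two tag sets: |A & B| / |A | B|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def related_items(current: str, tags: dict[str, set[str]], limit: int = 3) -> list[str]:
    """Rank other posts (or products) by tag overlap with `current`; drop zero scores."""
    scored = [
        (jaccard(tags[current], item_tags), item)
        for item, item_tags in tags.items()
        if item != current
    ]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best first, ties by name
    return [item for _, item in scored[:limit]]


catalog = {
    "steak-guide": {"steak", "grilling", "beef"},
    "rib-recipe": {"grilling", "beef", "ribs"},
    "salad": {"vegetables"},
    "grill-pans": {"grilling", "cookware"},
}
print(related_items("steak-guide", catalog))  # → ['rib-recipe', 'grill-pans']
```

The same function works for a Related Products block: pass product IDs with their category or attribute tags, and show the results on out-of-stock pages too.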

- Enable site search

The best way to make sure visitors can find what they’re looking for on your site is to give them a search option. The easiest way to do that is through Google’s custom search engines; this way, you’ll also be able to track what people search for on your site through Google Analytics, identify trends, and perhaps include some direct links to the pages they are looking for.

- Make your 404s useful

If some of your pages go 404, Google won’t know about it immediately; it may continue to display those pages in search results for a while. Clearly, bounces from 404s are inevitable; but you can keep some of your visitors on your site by making the page useful. Consider including links to things they might be looking for, or even adding a search bar so they can find those things themselves.
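One way to make a 404 page useful is to suggest existing pages whose URLs resemble the one requested. This sketch uses Python’s `difflib.get_close_matches` for the matching (the 0.5 `cutoff` and the `/search` form endpoint are assumptions) and adds a search box. Whatever you render, keep serving it with an HTTP 404 status so Google can eventually drop the dead URL:

```python
import difflib
from html import escape


def suggest_pages(requested_path: str, known_paths: list[str], n: int = 3) -> list[str]:
    """Return existing paths that look most like the one that 404'd, best first."""
    return difflib.get_close_matches(requested_path, known_paths, n=n, cutoff=0.5)


def render_404(requested_path: str, known_paths: list[str]) -> str:
    """Build a 404 body with 'were you looking for' links and a site-search form."""
    links = "".join(
        f'<li><a href="{escape(p)}">{escape(p)}</a></li>'
        for p in suggest_pages(requested_path, known_paths)
    )
    return (
        "<h1>Sorry, we couldn't find that page</h1>"
        + (f"<p>Were you looking for one of these?</p><ul>{links}</ul>" if links else "")
        + '<form action="/search" method="get">'
        + '<input type="search" name="q" aria-label="Search this site">'
        + "<button>Search</button></form>"
    )


site_paths = ["/blog/grilled-steak-tips", "/blog/rib-recipes", "/contact"]
print(suggest_pages("/blog/grilled-steak-tip", site_paths)[0])  # → /blog/grilled-steak-tips
```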

- Create compelling content

You’ve surely heard this one before, but you can’t possibly leave your visitors satisfied if you follow all the tips above and ignore this one. Apart from ensuring that your content is useful and unique, make sure your page gives searchers what they are looking for, whatever that is. With your content, you need to deliver what your Google snippet promised and fulfill the searcher’s conscious and unconscious needs.

A good starting point is to think of queries as steps in certain “tasks”. Say, “social media management software reviews” would be a logical first step in the task of purchasing SMM software. When you can, try to think a few steps ahead and offer searchers solutions to problems they haven’t put into queries yet.

There’s almost an element of mind reading in it. But who said SEO was easy?

Before we go…

Please don’t try to manipulate these metrics.

Google’s well aware of the techniques that can be used to artificially inflate CTR and dwell time, and it’s very good at detecting them. While Rand Fishkin’s tests with human participants have shown impressive results, experiments that used bots to manipulate user data failed to show any. Google stores lots of information on every individual searcher, including searching and browsing history. Google’s just too smart to let the feedback of a bot — a searcher with no history, or one with a history that does not look natural — mess with the search results.

